It is difficult to establish a timeline of what we now call Artificial Intelligence, because to this day the concept remains abstract for many people. It is considered so broad and revolutionary that a concrete explanation is equally hard to give. What we do know is that, in its simplest definition, Artificial Intelligence is a multidisciplinary science that brings a wide range of fields under its umbrella.

In 1950, Alan Turing, the British mathematician, logician, cryptographer, philosopher, and theoretical biologist widely regarded as one of the fathers of computing and a pioneer of modern computer science, posed a simple question: can machines think?

To explore this question, he proposed what is now known as “The Turing Test,” in which a human evaluator holds a conversation with both a computer and another person without knowing which of the two is the machine. Turing's aim was to assess a machine's ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human being.

His 1950 paper “Computing Machinery and Intelligence” opens with the words: “I propose to consider the question, ‘Can machines think?’”

Because the word “think” was so difficult to define, Turing replaced this question with another: “Are there imaginable digital computers which would do well in the imitation game?” He believed this question could actually be answered, and devoted much of the paper to rebutting the main objections to the idea that machines can think.

Many researchers and historians, however, place the starting point of Artificial Intelligence in 1956, when John McCarthy, Marvin Minsky, Claude Shannon, and Nathaniel Rochester brought the term into formal use at a summer workshop at Dartmouth College, the “Dartmouth Summer Research Project on Artificial Intelligence,” funded by the Rockefeller Foundation. McCarthy, who coined the name, later defined AI as “the science and engineering of making intelligent machines, especially intelligent computer programs.”

Decades later, in 1995, Stuart Russell and Peter Norvig published the textbook “Artificial Intelligence: A Modern Approach,” which sought to pin down what constitutes an intelligent machine and what Artificial Intelligence really is. The authors define AI as “the study of agents that receive percepts from the environment and perform actions.”

Within its pages, the book organizes definitions of Artificial Intelligence into four approaches: thinking humanly and thinking rationally (concerned with thought processes and reasoning), and acting humanly and acting rationally (concerned with behavior).

Do you agree?

Patrick Winston, an American computer scientist, MIT professor, and longtime director of the MIT Artificial Intelligence Laboratory, offered his own definition of Artificial Intelligence: “algorithms enabled by constraints, exposed by representations that support models targeted at loops that tie thinking, perception, and action together.”

More recently, the researcher Yoshua Bengio has pointed to 2012 as the starting point of the AI explosion: the moment when the first commercial products capable of understanding speech appeared, along with applications that could identify the content of images, such as the features Google Photos offers today.

In 2017, Jeremy Achin, then CEO of DataRobot, offered a more recent definition: “Artificial Intelligence is a computer system capable of performing tasks that normally require human intelligence… Many of these AI systems are based on Machine Learning, others on Deep Learning, and others on very boring things like rules.”

To get closer to understanding what AI is, it helps to define and distinguish the first two of these terms. Machine Learning is the process of feeding a computer data from which, using analytical techniques, it learns how to perform a task instead of being given explicit rules for it.
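To make the idea of learning from data a little more concrete, here is a minimal sketch in Python. It assumes the simplest possible task, fitting a straight line to a handful of hypothetical example points with ordinary least squares; real Machine Learning systems use far larger datasets and more flexible models. The function names `fit_line` and `predict`, and the toy data, are illustrative inventions rather than part of any library.

```python
# A toy illustration of "learning from data": rather than hard-coding a rule,
# the program estimates the rule (a slope and an intercept) from examples.

def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares (hypothetical helper)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Unnormalized covariance of x and y, and variance of x (the 1/n cancels out)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned rule to a new, unseen input."""
    slope, intercept = model
    return slope * x + intercept


if __name__ == "__main__":
    # Hypothetical training examples: inputs paired with the answers we want
    xs = [1, 2, 3, 4]
    ys = [3, 5, 7, 9]
    model = fit_line(xs, ys)
    print(model)              # (2.0, 1.0)
    print(predict(model, 5))  # 11.0
```

The "learning" here is simply computing the slope and intercept from the examples; a rule-based program, by contrast, would have those two numbers written in by a programmer.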

Deep Learning is a type of Machine Learning inspired by the way neurons are connected in the human brain. It uses artificial neural networks with multiple layers to process and learn from large amounts of data.
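The idea of stacking layers can be sketched in a few lines of Python. This is a deliberately simplified illustration under clear assumptions: a tiny network of artificial neurons with a step activation, whose weights have been set by hand so that it computes XOR (output 1 when exactly one input is 1), something a single neuron of this kind cannot do on its own. In an actual Deep Learning system the weights are learned from data rather than chosen by a person, and networks are vastly larger. The names `step`, `neuron`, `layer`, and `xor_network` are hypothetical.

```python
# A hand-wired two-layer network of threshold neurons that computes XOR.
# Weights are chosen manually for illustration; deep learning would learn them.

def step(z):
    """Step activation: the neuron "fires" (1) only if its input is positive."""
    return 1 if z > 0 else 0


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def layer(inputs, weight_rows, biases):
    """A layer is a list of neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


def xor_network(x1, x2):
    # Hidden layer: the first neuron behaves like OR, the second like AND
    hidden = layer([x1, x2], [[1, 1], [1, 1]], [-0.5, -1.5])
    # Output layer: "OR but not AND", which is exactly XOR
    (out,) = layer(hidden, [[1, -1]], [-0.5])
    return out


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "->", xor_network(a, b))
```

Each layer transforms the output of the one before it; adding a layer lets the network combine simpler pieces (here, OR and AND) into a behavior no single neuron could express.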

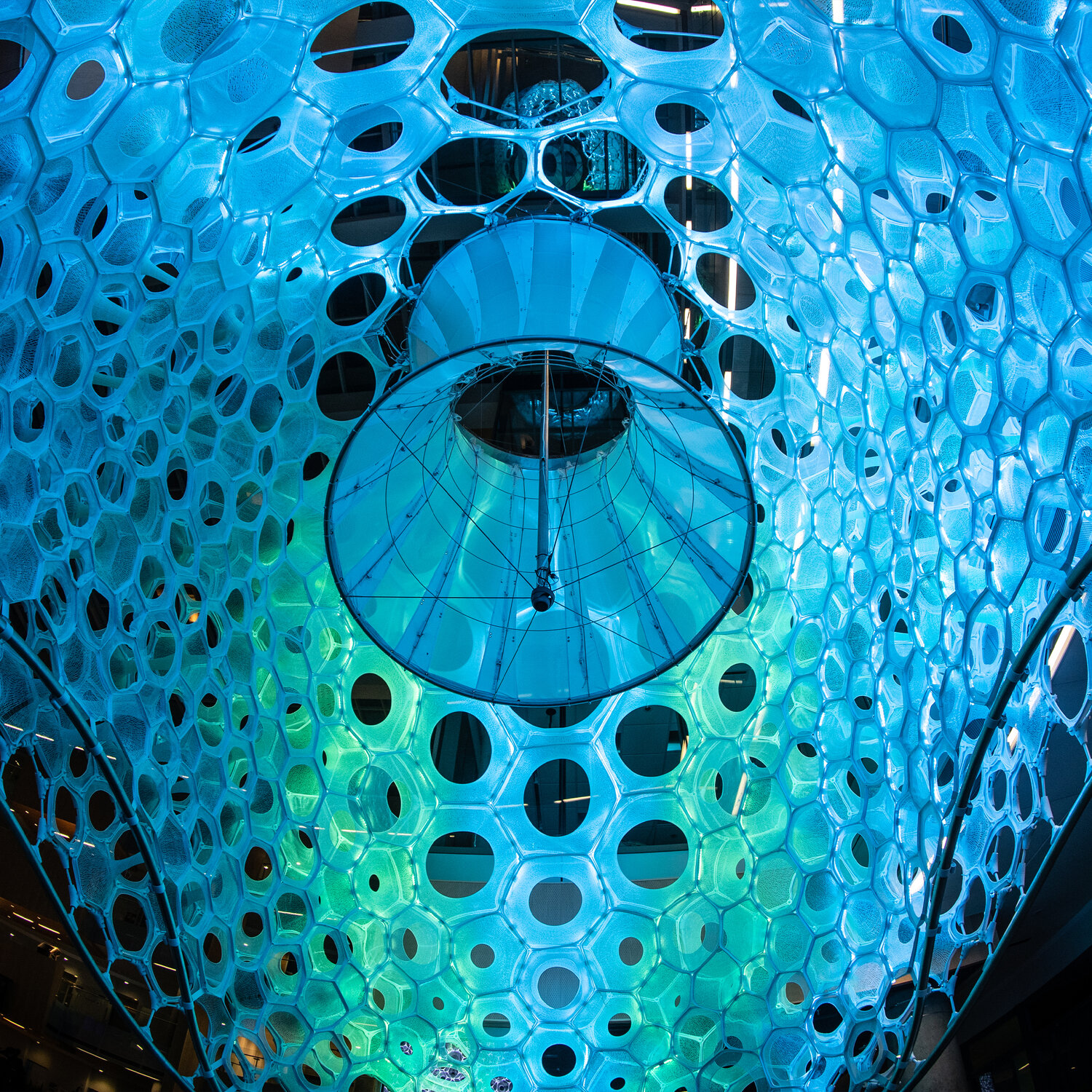

ADA by Jenny Sabin, in collaboration with Microsoft Research.
